“There are two kinds of creation myths: those where life arises out of the mud, and those where life falls from the sky. In this creation myth, computers arose from the mud and code fell from the sky.”

— George Dyson, “Turing’s Cathedral: The Origins of the Digital Universe”, New York: Random House, 2012.

“The DIME network architecture arose out of the need to manage the ephemeral nature of life in the Digital Universe”

— Rao Mikkilineni (2012)

Abstract:

The explosion of current cloud computing software offerings (both open source and proprietary) for creating public, private and hybrid clouds raises a question. Is it resulting in higher resiliency, efficiency and scaling of service offerings, or is it increasing complexity by introducing more components into an already crowded datacenter that deploys myriad appliances, management frameworks, tools and people, all claiming to help lower the total cost of operation? Because the reliability, availability, performance, security and efficiency of the total system depend both on the number of components and on their configuration, the architecture of a system plays an important role in defining the overall system resiliency, efficiency and scaling. We discuss current cloud computing architecture and the resulting complexity, and investigate possible solutions using the self-organizing fractal theory and non-equilibrium thermodynamics. Evolution has taught us that when complexity increases, an architectural transformation often occurs to lower the overall system entropy. Is a phase transition about to occur in our data centers, seeded by the new many-core servers and high-bandwidth communications?

Introduction:

According to Holbrook (Holbrook 2003), “Specifically, creativity in all areas seems to follow a sort of dialectic in which some structure (a thesis or configuration) gives way to a departure (an antithesis or deviation) that is followed, in turn, by a reconciliation (a synthesis or integration that becomes the basis for further development of the dialectic). In the case of jazz, the structure would include the melodic contour of a piece, its harmonic pattern, or its meter…. The departure would consist of melodic variations, harmonic substitutions, or rhythmic liberties…. The reconciliation depends on the way that the musical departures or violations of expectations are integrated into an emergent structure that resolves deviation into a new regularity, chaos into a new order, surprise into a new pattern as the performance progresses.” He goes on to explain exquisitely what “all that jazz” means and what it has to do with Dynamic Open Complex Adaptive System or DOCAS.

I borrow the jazz metaphor to understand the current state of affairs in cloud computing. Cloud computing started innocently enough as an attempt to automate the systems administration tasks of computing systems in order to improve the resiliency (availability, reliability, performance and security), efficiency and scaling of services provided by web-hosting data centers. Before the advent of global web e-commerce, enabled by broadband networks and ubiquitous access to high-powered computing, workload fluctuations were not wild enough to demand very fast provisioning responses. Enterprise datacenters were not pushed to deal with such wild fluctuations, but web-services companies such as Amazon, Google, Facebook and Twitter, dealing with uncertain (non-deterministic) workload fluctuations, took a different approach to improve resiliency and scaling. They took advantage of the increased power of blade servers, high-bandwidth networks and virtualization technologies to create virtual machine (VM) based systems administration, in which multiple VMs in a physical device consolidate workloads that are managed with dynamic resource provisioning. This has become known as cloud computing. Strictly speaking, VMs are not essential for the automation that improves scaling, auto-failover and live migration of applications and their data, and companies such as Google have chosen their own automation strategies without using VMs. On the other hand, many other enterprises have taken a more conservative approach, not adopting the cloud strategy so as to avoid the risk of impacting the availability, performance and security of their highly tuned mission-critical applications. They are probably correct, given the continued occasional outages, security breaches and cost escalation in managing complexity with many public clouds.

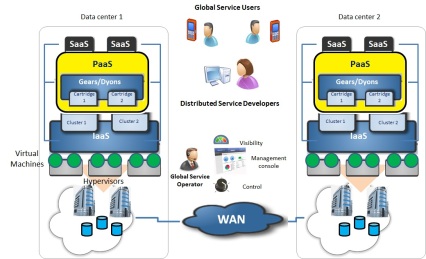

Amazon and Google went one step further by offering their flexible infrastructures to developers outside their companies, who could rent the resources to develop, deploy and service their own applications, thus unleashing a new class of developers. Startups could substitute OPEX for CAPEX to obtain the resources required for their new product and services development. The resulting explosion of applications and services has created a new demand for more clouds and more automation of systems administration, both to extend resiliency and to provide a high degree of isolation among multiple tenants sharing resources while resolving the resulting contentions. The result is a complex web of Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) offerings to meet the needs of developers, service providers and service consumers. To be sure, these offerings are not independent. On the contrary, each layer influences the others in a complex set of interactions, often in a non-deterministic way, based on workloads, business priorities and latency constraints. Figure 1 shows an example of these relationships.

Figure 1: Complex relationships of information flow between nested layers and information flows between components in each layer. The complexity is only compounded by multi-vendor offerings in each layer (not shown here)

The origin of complexity is easy to understand. While attempting to solve the issues of multi-tenancy and agility, the introduction of virtual machines gives rise to another complexity: virtual image management and sprawl control. To address the VM mobility issue, recent efforts to introduce application-level mobility using other container constructs, such as Gears and Cartridges in the case of the Red Hat PaaS (or Dynos in the case of Heroku, the Salesforce PaaS), introduce yet another layer of management for Gears and Cartridges (or Dynos). Another example is the Eucalyptus Infrastructure as a Service, which goes to great lengths to provide High Availability (HA) of the infrastructure platform but fails to guarantee HA of applications. It is left to the applications to fend for themselves. These ad-hoc approaches to automating management have mushroomed the software required, steepened the learning curve and made operation and maintenance even more complex. While all platforms demonstrate drag-and-drop software with pretty displays that allow developers to easily create new services, there is no guarantee that, if something goes wrong, one will be able to debug and find the root cause. Nor is there any assurance that, when multiple services and applications are deployed on the same platform, the feature interactions and shared resource management provided by a plethora of independently designed management systems will cooperate to provide the required reliability, availability, performance and security at the service level. More importantly, when services cross server, data-center and geographical boundaries, there is no visibility and control of end-to-end service connections and their FCAPS (fault, configuration, accounting, performance and security) management.
Obviously, the platform vendors are only too eager to provide professional services and additional software to resolve these issues, but without end-to-end service connection visibility and control that spans multiple modules, systems, geographies and management systems, troubleshooting expenses could outweigh the realized benefits. What we need is probably not more “code” but an intelligent architecture that results in a synthesis of computing services and their management, and a decoupling of end-to-end service connection and service component management from the underlying resource (server, network and storage) management.

Self-organizing Fractals and Non-equilibrium Thermodynamics:

Fortunately, the self-organizing fractal theory (SOFT) and non-equilibrium thermodynamics (NET) (Kurakin 2011) provide a way to analyze complex systems and identify solutions. A very good glimpse into the theory can be found in the video (http://www.scivee.tv/node/4994). According to the SOFT-NET theory, the process of self-organization is scale-invariant and proceeds through sequential organizational state transitions, in a manner characteristic of far-from-equilibrium systems, with macrostructure-processes emerging via phase transition and self-organization of microstructure-processes. Once they have emerged as a result of an organizational transition, newborn structure-processes strive to persist and expand, growing in size/number, diversity, complexity, and order, while feeding on pre-existing energy/matter gradients. Economic competition among alternatively organized structure-processes feeding on the same energy/matter gradients leads to the elimination of economically deficient or inferior structure-processes and the improvement, diversification, and specialization of survivors, who are forced to fill and exploit all the available resource niches (the Darwinian phase of self-organization) (Kurakin 2007). Promoted by mutually profitable exchanges of energy/matter, the self-organization of specializing survivors (structure-processes) into larger scale structure-processes transforms (mostly) competing alternatives into (mostly) cooperating complements. As a result, Darwinian competition is transferred onto a larger spatiotemporal scale, where it commences among alternative organizations of self-organized survivors (the organizational phase) (Kurakin 2007).
Such an economy-driven, scale-invariant process of self-organization leads to the emergence of increasingly long-lived, multi-scale, hierarchical organizations (structures-processes) that expand over increasingly larger scales of space and time, feeding on available energy/matter gradients and eventually destroying them. Yet because energy/matter exists as a non-equilibrium system of interdependent gradients and conjugated fluxes of interconverting energy/matter forms, new gradients and fluxes are created and become dominant as old gradients and fluxes are consumed and destroyed. Such processes are responsible for the continuous birth, death, and transformation of energy/matter forms.

Obviously, cloud computing systems (or, for that matter, distributed computing systems in general based on Turing machines) are not living organisms and thus are not susceptible to self-organization. However, if you substitute information for energy/matter, there are many similarities between the structure and dynamics of computing systems and those of living self-organizing systems. The nested computing layers, the meta-stable organizational patterns (both macro- and micro-structures) in each layer, and process evolution through inter-layer interaction are the same features that contribute to self-organization. So one can ask what is missing for cloud computing environments to become self-organizing. The answer lies in two observations:

- The first is Gödel’s prohibition of self-reflection by the computing element that forms the fundamental building block in the computing domain, the Turing machine (TM) (Samad and Cofer, 2001).

- The second is the lack of the scale-invariant macro and micro structure-processes mentioned above for organizing computing components and their management across the various nested layers, a lack that results from the current ad-hoc implementation of computing processes using the serial von Neumann implementation of the Turing machine.

I have discussed both these deficiencies elsewhere (Mikkilineni 2011, 2012). The DIME network architecture proposed there attempts to address both these deficiencies.

The DIME Network Architecture:

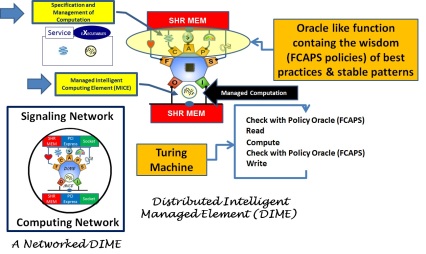

In its simplest form, a DIME comprises a policy manager (determining the fault, configuration, accounting, performance, and security aspects, often denoted by FCAPS); a computing element called the MICE (Managed Intelligent Computing Element); and two communication channels. The FCAPS elements of the DIME provide setup, monitoring, analysis and reconfiguration based on workload variations, system priorities based on policies, and latency constraints. They are interconnected and controlled using a signaling channel, which overlays a computing channel that provides I/O connections to the MICE (or the computing element) (Mikkilineni 2011). The DIME computing element acts like the oracle machine that Turing introduced in his thesis, and circumvents Gödel’s halting and undecidability issues by separating the computing from its management and pushing the management to a higher level. Figure 2 shows the DIME computing model.

Figure 2: The DIME Computing Model. For details on the different implementations of DIME networks (a LAMP stack without VMs and a native Parallax OS) visit http://www.youtube.com/kawaobjects
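To make the separation of computing and management concrete, here is a minimal Python sketch of a single DIME. It is an illustration only, not the implementation described in the references: the class name `Dime`, the policy keys `enabled` and `max_tasks`, and the `set_policy` command are all hypothetical, and two in-memory queues stand in for the signaling and computing channels.

```python
from dataclasses import dataclass, field
from queue import Queue
from typing import Any, Callable


@dataclass
class Dime:
    """Hypothetical DIME: a MICE (plain function) plus a policy manager."""
    name: str
    mice: Callable[[Any], Any]  # the managed computing element
    policy: dict = field(default_factory=lambda: {"enabled": True, "max_tasks": 3})
    signaling: Queue = field(default_factory=Queue)  # management commands only
    computing: Queue = field(default_factory=Queue)  # task input (I/O) only
    completed: int = 0

    def manage(self) -> None:
        """Drain the signaling channel and apply policy changes."""
        while not self.signaling.empty():
            command, value = self.signaling.get()
            if command == "set_policy":  # assumed command name
                self.policy.update(value)

    def step(self) -> Any:
        """Run at most one task, and only if the current policy allows it."""
        self.manage()  # management is consulted before any computing
        if not self.policy["enabled"] or self.completed >= self.policy["max_tasks"]:
            return None
        if self.computing.empty():
            return None
        result = self.mice(self.computing.get())
        self.completed += 1
        return result


dime = Dime("square", mice=lambda x: x * x)
dime.computing.put(4)
dime.computing.put(5)
first = dime.step()                                     # task runs under the default policy
dime.signaling.put(("set_policy", {"enabled": False}))  # external manager intervenes
second = dime.step()                                    # task stays queued; nothing runs
```

The point of the sketch is that the MICE never inspects or changes its own policy: control arrives over a separate channel, from outside the computation.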

In addition, the introduction of signaling in the DIME network architecture allows a fractal composition scheme of the DIME network to create a recursive distributed computing engine with scale-invariant FCAPS management of the computing workflow at the node, sub-network and network levels. Figure 3 shows the comparison between living organisms with self-organizing fractal attributes and cloud computing infrastructure organized to exhibit self-management fractal attributes.

Figure 3: Comparison of the nested hierarchical organization of living organisms and DIME network architecture.


While both models exhibit the genetic transactions of replication, repair, recombination and reconfiguration (Stanier and Moore, 2006) (Mikkilineni 2011), there is a fundamental difference between the two. The DIME network architecture is not self-organizing but it is self-managing based on initial policies and constraints defined at the root levels of the hierarchies. These policies can be modified during run time but only with the influence of agents external to the computing element whose behavior is under modification (at the DIME node, sub-network and network level).
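The recursive, policy-driven composition described above can be sketched in a few lines of Python. This is a hedged illustration under simplifying assumptions: the `ManagedNode` class, its method names and the policy keys (`max_latency_ms`, `replicas`) are hypothetical, and policies are reduced to plain dictionaries. The same structure is used at the network, sub-network and DIME node levels, policies flow down from the root, and a node's policy is changed only by the manager one level above it.

```python
class ManagedNode:
    """Hypothetical node in a DIME-style hierarchy (network, sub-network or DIME)."""

    def __init__(self, name, local_policy=None):
        self.name = name
        self.local_policy = dict(local_policy or {})
        self.parent = None
        self.children = []

    def add(self, child):
        """Attach a child; the same node type is reused at every level."""
        child.parent = self
        self.children.append(child)
        return child

    def effective_policy(self):
        """Policies defined upstream are inherited; local settings refine them."""
        inherited = self.parent.effective_policy() if self.parent else {}
        return {**inherited, **self.local_policy}

    def set_child_policy(self, child_name, **changes):
        """A policy is modified only by an agent external to the node itself."""
        for child in self.children:
            if child.name == child_name:
                child.local_policy.update(changes)
                return
        raise KeyError(child_name)


network = ManagedNode("network", {"max_latency_ms": 50, "replicas": 2})
subnet = network.add(ManagedNode("subnet-a"))
node = subnet.add(ManagedNode("dime-1"))

before = node.effective_policy()                   # inherited from the root
network.set_child_policy("subnet-a", replicas=3)   # run-time change from upstream
after = node.effective_policy()                    # the change is visible downstream
```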

At each level, the FCAPS management defines the initial conditions and policy constraints (meta-model if you will, denoting the context and defining the destiny of the ensuing process workflow) that will define the information flows and workflows executed by the DIME network downstream. The resulting metastable configurations are monitored and managed by the managers upstream. This model exhibits the three-step processes that provide self-management in living organisms – establish routine, monitor cues and respond with corrective action based on FCAPS parameters at every level. Figure 4 shows the metastable configuration entropy of the whole system. The FCAPS parameters monitored provide a measure of system entropy shown and the reconfiguration alters the state from higher entropy to lower entropy providing a “measure” of the stable pattern.

Figure 4: System Entropy as a function of time
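The three-step loop (establish routine, monitor cues, respond with corrective action) can be illustrated with a small Python sketch. The `deviation` score below is only a hypothetical stand-in for the entropy curve in Figure 4, not the measure used in the referenced work, and the capacity figures and the `utilization` limit are made-up values chosen for the example.

```python
def deviation(observed, limits):
    """Hypothetical 'entropy' proxy: total relative overshoot of policy limits."""
    return sum(max(0.0, observed[key] - limit) / limit
               for key, limit in limits.items())


def manage(workload, workers, limits, capacity_per_worker=100.0, max_workers=10):
    """Establish routine (limits), monitor cues (utilization), respond (add workers)."""
    history = []
    for demand in workload:
        # Monitor: measure the cue against the established policy limits.
        utilization = demand / (workers * capacity_per_worker)
        score = deviation({"utilization": utilization}, limits)
        # Respond: reconfigure until the deviation returns to zero.
        while score > 0 and workers < max_workers:
            workers += 1
            utilization = demand / (workers * capacity_per_worker)
            score = deviation({"utilization": utilization}, limits)
        history.append((demand, workers, round(utilization, 2)))
    return history


history = manage(workload=[100, 150, 350, 250], workers=2,
                 limits={"utilization": 0.8})
for demand, workers, utilization in history:
    print(f"demand={demand} workers={workers} utilization={utilization}")
```

In this sketch the manager only scales up. A fuller version would also release workers when demand falls, and would track more than one FCAPS parameter.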

The SOFT-NET theories provide a path to reexamine the way we design distributed computing systems. Perhaps the living organisms with their self-organizing properties could provide us a way to bring self-management to cloud computing configurations to improve resiliency, efficiency and scaling. The DIME network architecture is a baby-step to implement a recursive distributed computing engine to execute managed workflows that constitute hierarchical and temporal sequences of events executing business workflows.

The DIME network architecture raises some interesting questions about Turing machines and their management. How is it related to the Universal Turing Machine (UTM)? It is important to point out that I do not claim that DIME networks are the answer to cloud computing woes or that the UTM can or cannot do what a DIME network does. While communicating Turing machines are modeled by a UTM (Penrose 1989), can managed Turing machine networks also be modeled by the UTM? Are the scale-invariant organizational macro and micro structure-processes discussed in SOFT-NET theory essential for self-organizing systems? What are the differences between living self-organizing systems and self-managing networks? I leave this to the experts. I only point out that the DIME is inspired by the oracle machine discussed by Turing in his thesis and implements the architectural resiliency of cellular organisms in distributed computing infrastructure by introducing parallel management of both the computing elements and networks. While its feasibility has been demonstrated (Mikkilineni, Morana and Seyler, 2012), the DIME network architecture is still in its infancy and presents an opportunity, on the eve of Turing’s centenary celebration, to investigate its usefulness and theoretical soundness. Only time will tell if the DIME network architecture is useful in mission-critical environments. Figure 5 shows a comparison of physical server based computing, virtual machine based cloud computing and a DIME network implementation in a Linux server that eliminates the hypervisors and virtual machines.

Figure 5: Comparison between conventional, cloud and DIME network computing paradigms. The DIME network architecture requires no Hypervisors, Virtual Machines, IaaS or PaaS. Linux processes are FCAPS managed and networked using a middleware library without any changes to the Operating System.

The DIME network architecture (DNA), with its self-management, parallel signaling network overlay and recursive distributed computing engine model, supports all the features that current cloud computing provides and more, while eliminating the need for Hypervisors, Virtual Machines, IaaS and PaaS. The DNA offers simplicity by providing FCAPS management of a Linux process through a middleware library, using standard services of the Linux operating system and the parallelism available in a multi-core/many-core processor.

Conclusion:

I conclude with one lesson from the past (Mikkilineni and Sarathy, 2009) that I take away from working in POTS (Plain Old Telephone System), PANS (Pretty Amazing New Services enabled by the Internet), SANs and Clouds: wherever there is networking, switching always trumps other approaches. When services are executed by a network of distributed components, service switching and end-to-end service connection management are the ultimate meta-stable structure-processes, and it seems that cellular organisms, telephone networks, and human network eco-systems have figured this out. Signaling and nested FCAPS management structure-processes seem to be the common ingredients. Therefore, I predict that the data centers, which are currently computing resource management centers, will eventually transform themselves into services switching centers, just as in telephony. Perhaps computer scientists should look to telephony, neuroscience and organizational dynamics for answers rather than engaging in hackathons and coding ad-hoc complex systems to manage distributed computing resources. SOFT-NET theories seem to be pointing in the right direction. The solution may lie in discovering scale-invariant micro- and macro-structure-processes that provide nested FCAPS management and self-managed local and global policy enforcement. Perhaps Holbrook’s “All that Jazz” is an appropriate metaphor for cloud computing research. The time may be ripe for the reconciliation (the synthesis of the thesis of implementing services and the antithesis of services management).

References:

Holbrook, Morris B. 2003. “Adventures in Complexity: An Essay on Dynamic Open Complex Adaptive Systems, Butterfly Effects, Self-Organizing Order, Coevolution, the Ecological Perspective, Fitness Landscapes, Market Spaces, Emergent Beauty at the Edge of Chaos, and All That Jazz.” Academy of Marketing Science Review [Online] 2003 (6) Available: http://www.amsreview.org/articles/holbrook06-2003.pdf

Kurakin, A., Theoretical Biology and Medical Modelling, 2011, 8:4. http://www.tbiomed.com/content/8/1/4

Kurakin A: The universal principles of self-organization and the unity of Nature and knowledge. 2007 [http://www.alexeikurakin.org/text/thesoft.pdf ].

Mikkilineni, R., Sarathy, V., (2009), “Cloud Computing and the Lessons from the Past,” Enabling Technologies: Infrastructures for Collaborative Enterprises, 2009. WETICE ’09. 18th IEEE International Workshops on, pp. 57-62, June 29 2009-July 1 2009. doi: 10.1109/WETICE.2009.

Mikkilineni, R., (2011). Designing a New Class of Distributed Systems. New York,NY: Springer. (http://www.springer.com/computer/information+systems+and+applications/book/978-1-4614-1923-5)

Mikkilineni (2012) Turing Machines, Architectural Resilience of Cellular Organisms and DIME Network Architecture (http://www.computingclouds.wordpress.com )

Mikkilineni, R., Morana, G., and Seyler, I., (2012), “Implementing Distributed, Self-managing Computing Services Infrastructure using a Scalable, Parallel and Network-centric Computing Model” Chapter in a Book edited by Villari, M., Brandic, I., & Tusa, F., Achieving Federated and Self-Manageable Cloud Infrastructures: Theory and Practice (pp. 1-374). doi:10.4018/978-1-4666-1631-8

Penrose, R., (1989) “The Emperor’s New Mind: Concerning Computers, Minds, And The Laws of Physics” New York, Oxford University Press, p. 48

Samad, T., Cofer, T., (2001). Autonomy and Automation: Trends, Technologies, In Gani, R., Jørgensen, S. B., (Ed.) Tools in European Symposium on Computer Aided Process Engineering volume 11, Amsterdam, Netherlands: Elsevier Science B. V., p. 10

Stanier, P., Moore, G., (2006) “Embryos, Genes and Birth Defects”, (2nd Edition), Edited by Patrizia Ferretti, Andrew Copp, Cheryll Tickle, and Gudrun Moore, London, John Wiley & Sons
